convers.ations is an interactive audiovisual installation that addresses the general adverse attitude towards voice-enabled Artificial Intelligence (AI) systems that use speech-to-text technology (such as Apple’s Siri), caused by a lack of understanding of the operational characteristic behind the system. The installation aims to tackle this problem by making the operation of speech-to-text technology interpretable. It focusses on the linguistic model of the algorithm by presenting a rendition of textual output that is relatable and comparable for the audience. It is simple but immersive and enables the audience to, allusively, enter a black box of this technology. Ultimately, convers.ations invites the audience to engage with the properties of the algorithm and its contextual environments, isolated from the anthropomorphistic layer and commercial features and allows them to discover its functionality and enhance critical reflection on such AIs.

This installation was made in collaboration with Floor Stolk.

THE INSTALLATION

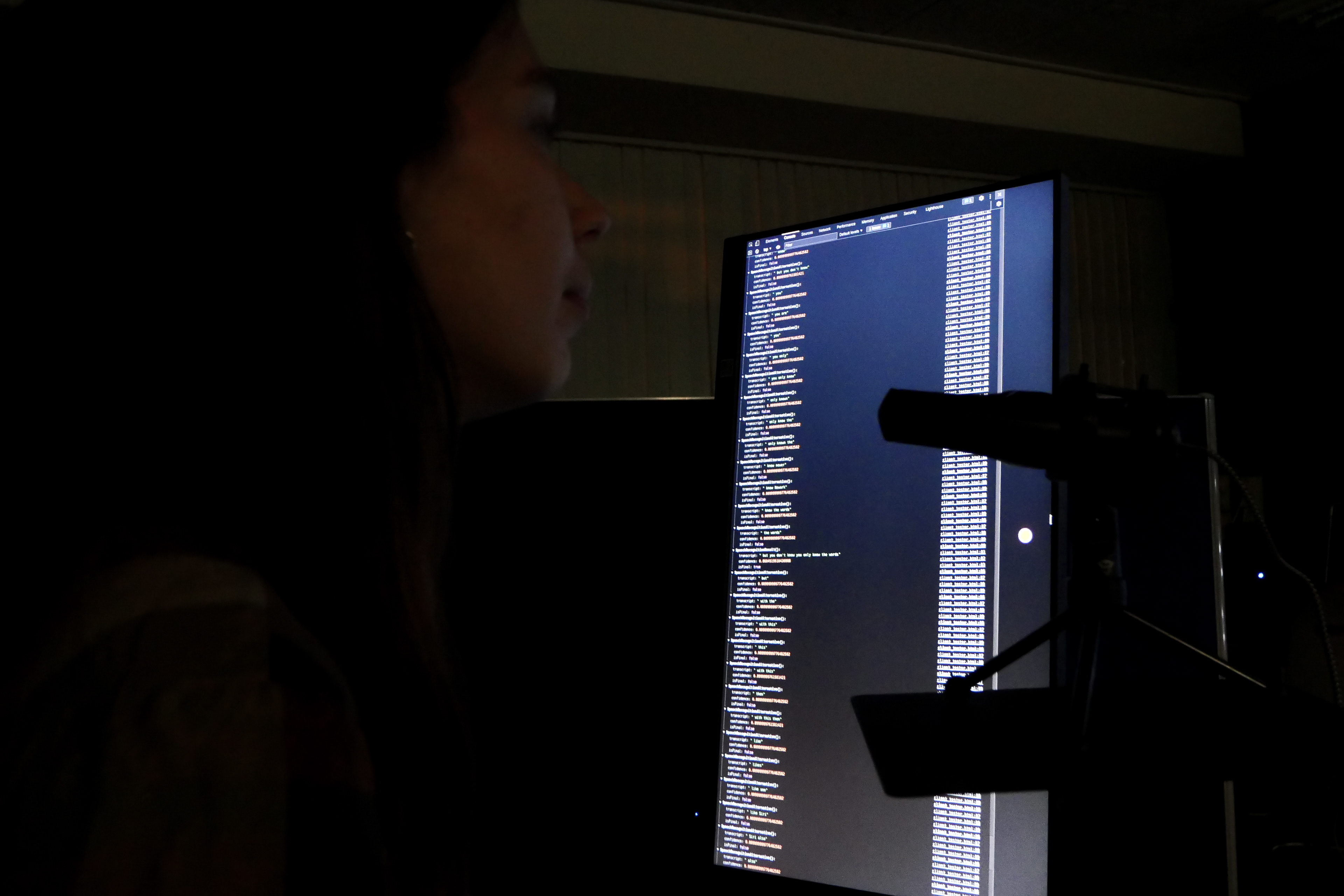

The installation provides an interactive data visualization that renders real-time speech-to-text data translated from the microphone placed in the installation space. The installation consists of an input console - a microphone and monitor, operated by a Raspberry Pi - and a display server – in the original set up a video wall - and is situated in an obscured space. In addition, surround speakers are placed around the installation to emit a soundscape of typical server room sounds to enhance the feeling of entering the black box of the technology.

Once inside, the audience can observe the display server with the latest processed text output of the algorithm alongside with confidence ratios. In the middle of the space the audience can find a microphone and computer monitor displaying direct intermediate results of the speech-to-text algorithm. The audience is invited to generate input for the system by speaking into the microphone. The intermediate results will be directly displayed in a log-format on the console monitor. Simultaneously, the display server will switch from visualization and dynamically display a new visualization of the interim results if speech is received.

THE INTERACTION

The audience can interact in two ways, either as an observer or as an active participant. The observer role allows the audience to explore previous processed interactions with the system, whereas the participant role allows direct interaction between human and machine.

When passing by the installation, the audience’s attention is captured by making use of the large visualization on the display server and the soundscape in the space. By allowing the passer-by to explore the installation from a distance, the intention to participate is encouraged. When decided to participate, the passer-by first takes on the role of observer: walking through the space and observing the visualization and interaction closer by.

The affordance of the placement of the microphone and input-console monitor in the room invite the observing audience to switch to the role of participant. The visibility of the relation between the text on the monitor and the microphone placed next to it, allows the participant to understand the interaction with very little mental effort, thus keeping the cognitive load low. By assuring that the cognitive load of the participation is low, the installation leaves enough cognitive capacity for interpretation and reflection on the properties of the technology.

IMMERSION

Besides the increase in interpretability of the system, the installation also enhances the tangibility of the AI. The installation gives the audience the experience of entering a black box by creating an immersive installation rather than just a visualization. This is achieved by placing the installation inside an obscured room and utilizing a surround soundscape of typical server-room sounds to additionally incorporate the auditory modality.

The physicality of the installation emphasizes the stark contrast between the abstract perception and the physical infrastructure of the technology. From the perception of the general public, the technology operates in the digital world, disembodied from the concreteness of the physical world they are living, and using this technology, in. The soundscape further enhances this experience as it implies that the computational process of speech-to-text AI does not happen on anyone’s phone but is outsourced to be transferred in a physical data center. Moreover, the physicality of the installation allows the audience to immerse themselves and participate in this technology. Instead of just observing and using this technology, like they are used to in general, they get the chance to conversance with the workability of the system in physical space.

THE TECHNOLOGY

For the installation, we integrated a commonly used, state-of-the-art, open-source API: Mozilla WebSpeech and made the procedures of the algorithm transparent by directly and continuously displaying intermediate results, which are usually hidden, to the general public and adversely using them as the base of our artwork. These insightful variables – transcript, confidence, and finality – are presented in real time on the console-monitor to the active participant, that is, if the API recognizes sound as speech via the microphone. Meanwhile, the data is also sent to the display server and is used to create an attractive textual data visualization.

The adaptation of the API is thus kept relatively simple, we deliberately just take the insightful variables. Such a simple adaptation is already sufficient to enable the audience to interpret the working of the system and thereby making the speech-to-text technology partially transparent. Furthermore, this simplicity is essential as it allows for meaningful explanation on what is happening with the data to both digital literate, and illiterate, audience, as strongly recommended by The Media & Technology Committee of AI4People.

Every variable that is being displayed represents how the system evaluates and transfers its calculations to generate the best possible result for the detected speech input. Moreover, this data assures the audience that the system processes speech in an entirely different way compared to a human brain. It confirms that, although it seems straight-forward, speech-to-text AI is not analogues to a human mind as is often considered by the general public.

In the end, convers.ations is based on technology similar to systems like Amazon’s Alexa or Apple’s Siri, without anthropomorphistic features and collateral obscurity. This is where the wordplay of the title becomes clear: by speaking to the system, the participant encounters the opposite (= converse) of how the technology is conveyed - a humanlike, seemingly intelligent, algorithm with a personality - resulting in a one-sided conversation against a computer that does nothing more than a bunch of statistical calculations.